Infinite Knowledge Yet No Breakthroughs

Isn't It Odd That Our Best AI Models Haven't Discovered Anything New (Yet)?

Dario Amodei, CEO of Anthropic, was recently asked, "When will we reach AGI?" To which he responded, "...AGI as a model that can do everything a human can do, even at the level of a Nobel laureate, is expected to be achieved in 2026-27." And for what it’s worth, he’s not the only one who believes we’ll reach AGI before the end of the decade.

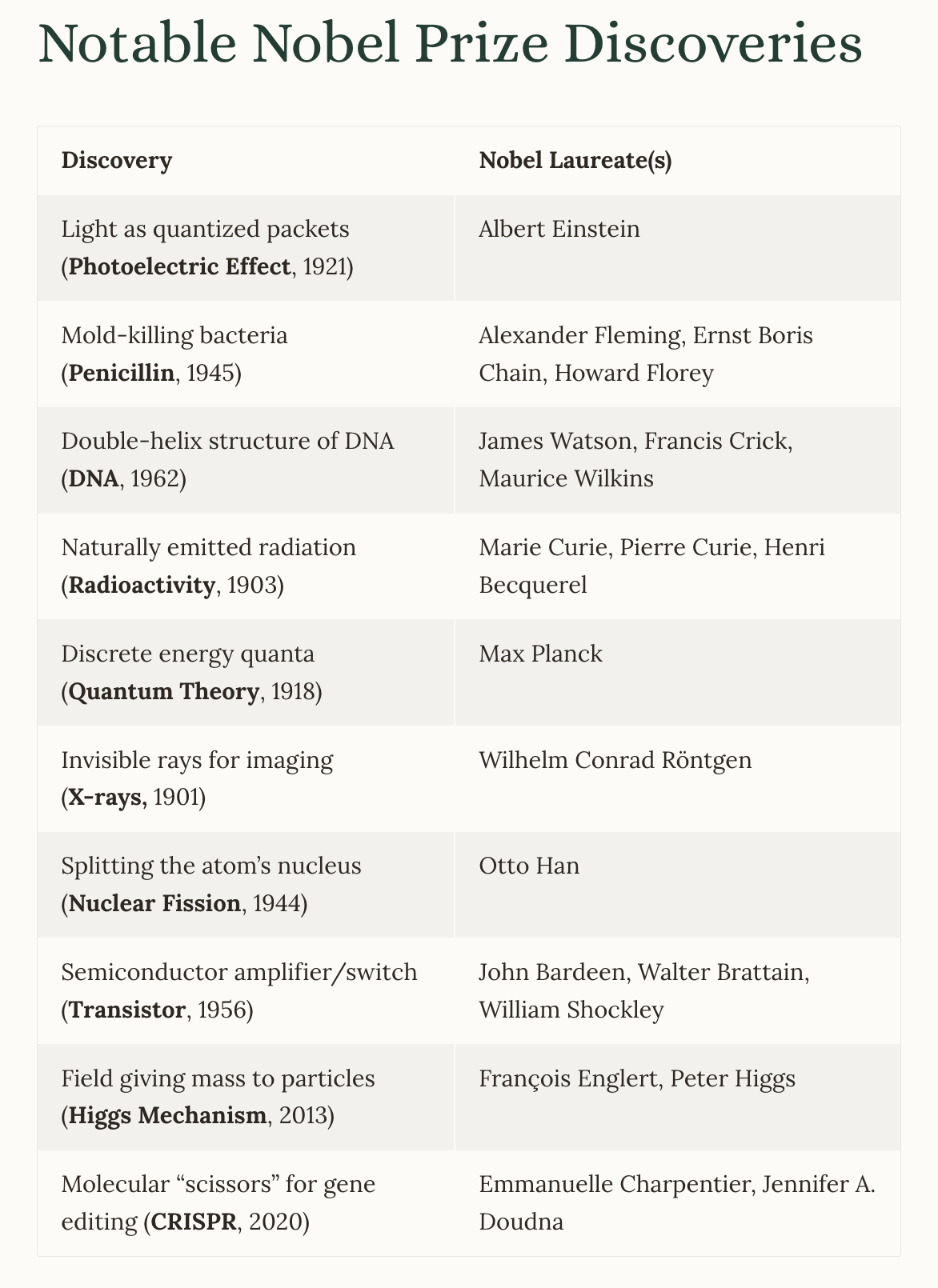

If you’re not awestruck by that statement, here’s a brief sampling of past Nobel laureates, their discoveries, and how their work has impacted our fundamental understanding of the universe and our way of life.

Just these ten Nobel Prize breakthroughs—spanning antibiotics, DNA structure, quantum theory, nuclear fission, the transistor, and more—have revolutionized medicine, technology, and our fundamental grasp of nature. Each innovation opened doors to new industries and scientific frontiers, redefining how we live, work, and comprehend the world today.

So when Dario says we’re going to have a Nobel-worthy AI in the next two years, I get incredibly excited for the mysteries of the universe we’re about to solve. We’re talking about AI that has effectively “read” the entire corpus of human knowledge. The dream is that—armed with more information than any one person could ever handle—AI would connect the dots nobody has thought to connect before.

Spoiler alert: That hasn’t happened yet. Despite the big leaps AI has made (AlphaFold predicting protein structures, exoplanets discovered in NASA data, chatbots spouting off as if they’re the authority on everything), no major new scientific principle or cosmic truth has come from these “all-knowing” systems.

So how is it that AI can be so brilliant at existing knowledge yet so unhelpful in forging brand-new discoveries? Isn’t it odd that…

…these things have basically the entire corpus of human knowledge memorized and they haven't been able to make a single new connection that has led to a discovery? Whereas if even a moderately intelligent person had this much stuff memorized, they would notice — Oh, this thing causes this symptom. This other thing also causes this symptom. There's a medical cure right here. Shouldn't we be expecting that kind of stuff? - Dwarkesh Patel

The Grand Promise of AGI

OpenAI. Anthropic. Meta. X. You’ve seen the headlines and read the mission statements: everyone’s building something that will “understand the universe” and push humanity forward. The story goes that if we just scale up the models, feed them more data, and add more parameters, then voilà…

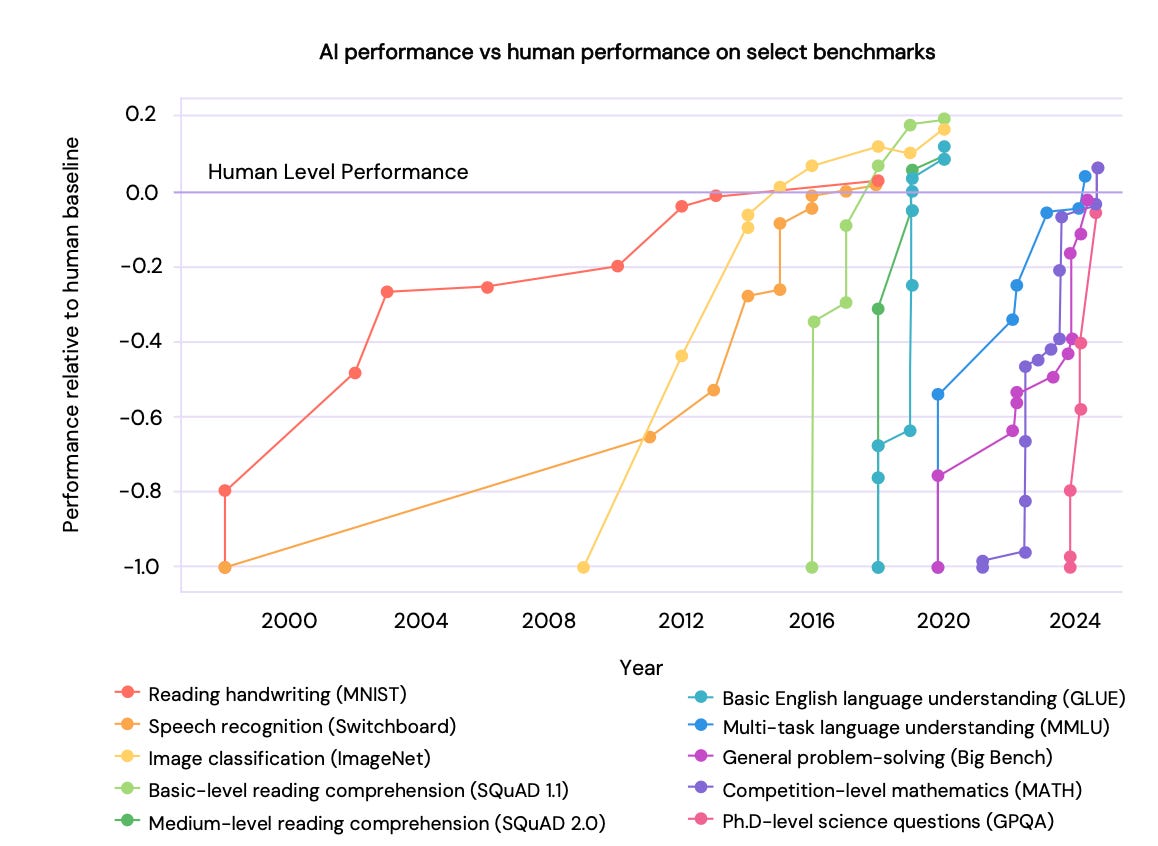

But we’re still waiting. While AI has definitely made our jaws drop with creativity in art, speech, deep fakes, and comedic memes, it hasn’t delivered that grand discovery that fundamentally shakes how we see reality. Gravity? Still 17th-century Newton. Quantum mechanics? Early 1900s. Relativity? Einstein, 1915. We can feed AI every physics paper ever written, yet it’s not coughing up a unifying theory of quantum gravity. And, yes, I know we’re still a few years away from AGI, but these models are already acing tests at or above human level in reading comprehension, math, and science…

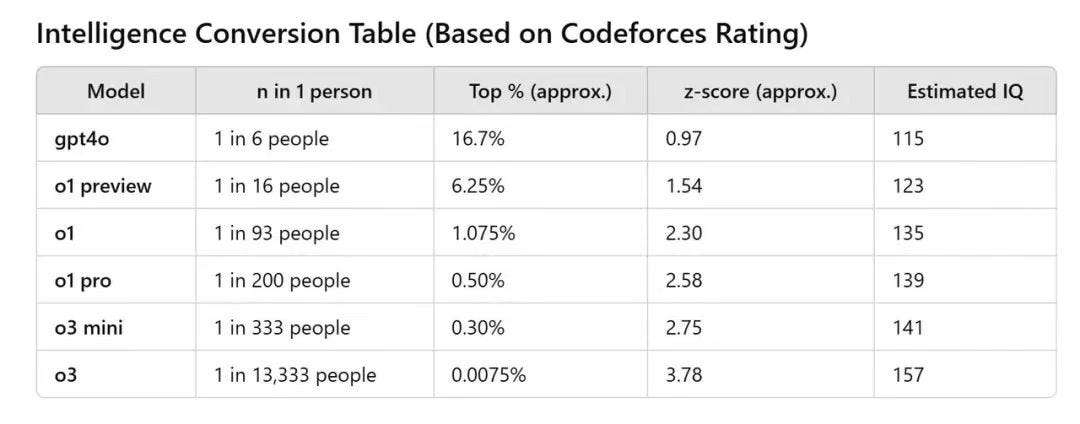

…and some have reportedly scored above 140 on IQ-style tests, well into “genius” territory.

So why are we not seeing any early signs of ‘genius’ emerge yet? Modern AI systems, such as large language models (think of tools like ChatGPT or Grok), are trained on massive amounts of text from the Internet, books, and other sources. This means they’ve "memorized" or stored a huge amount of human knowledge—facts, ideas, and patterns in language.

But here’s the problem: even though these AIs have all this information, they don’t seem to make new, creative connections or discoveries the way humans do. For example, a moderately smart person, if they knew all the same facts, might look at two unrelated symptoms (say, tiredness and headaches) and realize, "Hey, maybe these are caused by the same thing, like dehydration—and there’s a simple fix!" But AIs aren’t doing this.

The Unsolved Mysteries (Still Unsolved)

An AI showing early signs of genius, one that’s on its way to AGI, should start to make meaningful dents in some of the universe’s big mysteries:

Physics & the Deep Cosmic Unknowns

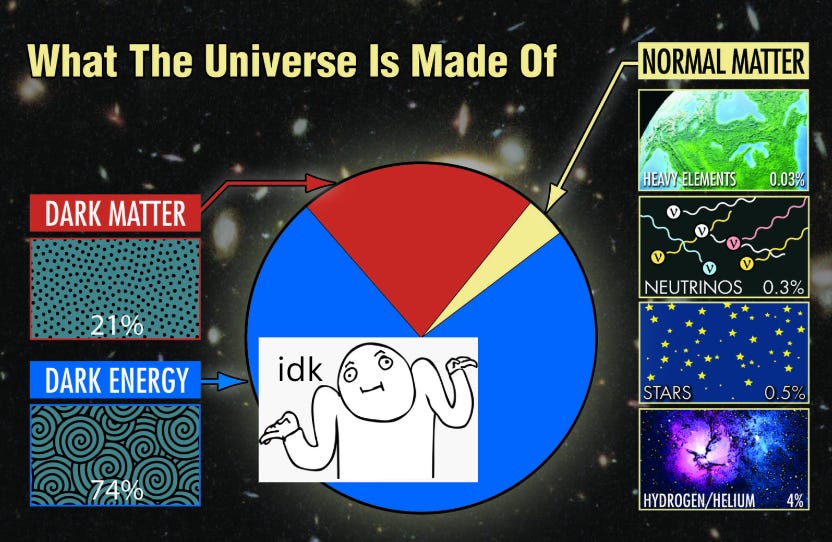

Dark Matter and Dark Energy: ~95% of the universe is… something. We label it “dark” because we basically have zero clue. AI has combed through cosmic data, sniffing for gravitational wobbles or strange lensing patterns. Still no “Eureka!” moment that says, “You see that spike in the data? That’s dark matter, right there.”

Quantum Gravity: We have two brilliant theories (quantum mechanics for tiny stuff, general relativity for the big stuff) that refuse to dance together. AI can help analyze monstrous piles of collider data, but it hasn’t bridged the conceptual gap that keeps them separate. The “Theory of Everything” is still a pipe dream. Sorry, Stephen.

Biology & the Origins of Life

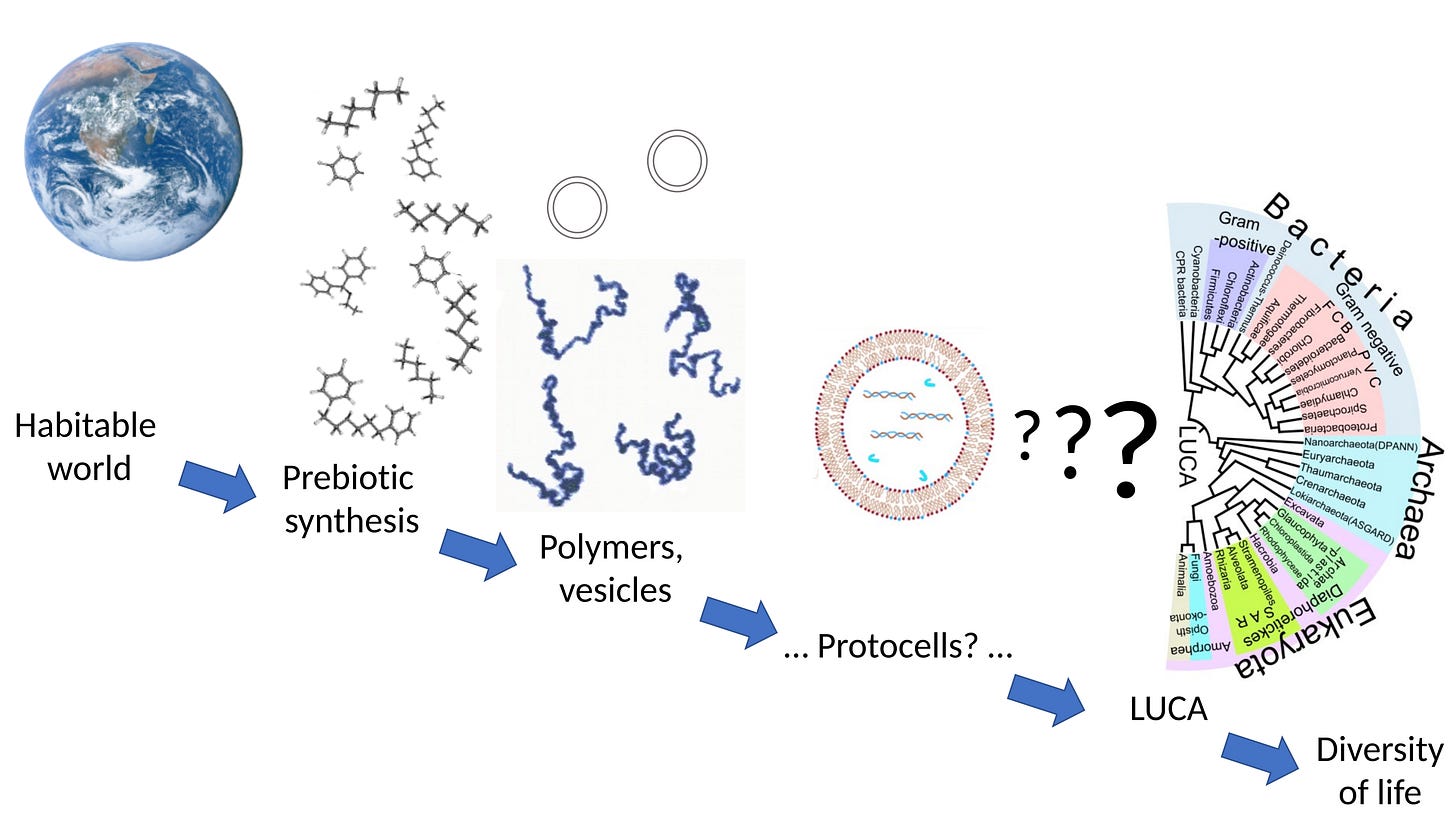

Abiogenesis: We know life sprang from non-living matter on Earth ~3.5+ billion years ago. We just don’t know how. AI can run fancy simulations, but we still haven’t pinned down that moment the first molecules turned themselves into life.

Consciousness: The “hard problem”—why do neural circuits produce subjective experience? We have absolutely no widely accepted explanation, and AI’s mimicry of intelligence hasn’t changed that. (If an AI says it “feels feelings,” do we believe it? We have no test or measure.)

Chemistry & Mystery Molecules

Homochirality: Many biological molecules come in “left-handed” and “right-handed” mirror-image forms, yet life on Earth mostly uses just one. For instance, the amino acids that build proteins are almost all “left-handed.” This preference is what scientists call homochirality, and the big mystery is why nature chose a single “hand” in the first place. No one knows exactly how that choice originated, even though it’s crucial for life as we know it. AI can run a million reaction simulations, but it hasn’t given a firm answer as to why nature is so chirally picky.

Superconductivity: We’d love a room-temperature superconductor. AI can guess new materials, but we still rely on human-led trial and error. The theoretical underpinnings remain murky.

Mathematics

P vs NP: This problem has been around for more than half a century, taunting computer scientists. It asks: “Can every problem whose solution is easy to check also be easy to solve?” Imagine you have a puzzle: once you have a potential solution, it takes only a moment to see if it’s correct, but it might take a very long time to find that solution in the first place. The P vs NP problem asks whether there’s always a shortcut that lets you solve these puzzles about as efficiently as you can check them. So far, no AI (or human) has delivered a definitive proof either way. The Clay Mathematics Institute still has $1 million waiting for anyone who cracks it, AI included.
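To make the “easy to check, hard to solve” gap concrete, here is a minimal sketch using subset sum (given some numbers, is there a subset that adds up to a target?), a classic NP-complete problem picked here purely for illustration. The function names `verify` and `solve_brute_force` are hypothetical, not from any library. Checking a proposed answer takes one quick pass; the naive solver may have to try all 2^n subsets, which for just 60 numbers is about 1.15 × 10^18 candidates.

```python
from __future__ import annotations

from itertools import combinations

# Subset sum: is there a subset of `numbers` adding up to `target`?
# Used here (as an illustrative assumption) as a stand-in for puzzles whose
# answers are easy to check but, as far as anyone knows, hard to find.


def verify(numbers: list[int], target: int, candidate: list[int]) -> bool:
    """Check a proposed answer (a list of indices) in a single fast pass."""
    if len(set(candidate)) != len(candidate):
        return False  # each number may be used at most once
    if not all(0 <= i < len(numbers) for i in candidate):
        return False  # every index must point into `numbers`
    return sum(numbers[i] for i in candidate) == target


def solve_brute_force(numbers: list[int], target: int) -> list[int] | None:
    """Find an answer by trying every subset: up to 2**n of them (slow)."""
    n = len(numbers)
    for size in range(n + 1):
        for combo in combinations(range(n), size):
            if sum(numbers[i] for i in combo) == target:
                return list(combo)
    return None  # no subset works


numbers = [3, 34, 4, 12, 5, 2]
answer = solve_brute_force(numbers, 9)
print(answer)                      # [2, 4], i.e. 4 + 5
print(verify(numbers, 9, answer))  # True
```

Cleverer methods than brute force exist, but none is known to run in polynomial time on every input. Because subset sum is NP-complete, finding such a method (or proving one can’t exist) would settle P vs NP itself.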

Riemann Hypothesis: Proposed in 1859, it’s about the distribution of prime numbers. Think of prime numbers (like 2, 3, 5, 7...) as the “atoms” of numbers, because every whole number greater than 1 can be built by multiplying them together. The Riemann Hypothesis suggests there’s a hidden pattern to where these primes show up on the number line. Mathematicians have a special “tool” (the Riemann zeta function) to study this pattern. The hypothesis says that all of the important (“nontrivial”) points where this tool outputs zero lie on a single straight line. Proving this would confirm a neat rhythm to the primes, but so far no one has managed to show it’s definitely true (or false). Billions of computational checks have supported it, yet no proof has emerged. AI can churn through patterns, but the logical leap to why it’s true (or not) remains elusive.
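For readers who want the statement behind the analogy, here is the standard textbook form, sketched briefly. The sum defines the zeta function only where the real part of s exceeds 1; it is extended to the rest of the complex plane (except s = 1) by analytic continuation. The Euler product is what ties zeta to the primes. The “trivial” zeros sit at the negative even integers; the nontrivial ones lie in the strip 0 < Re(s) < 1, and the hypothesis says they all sit on the line Re(s) = 1/2, which is the “straight line” above. The first one is at roughly 1/2 + 14.13i.

```latex
\zeta(s) \;=\; \sum_{n=1}^{\infty} \frac{1}{n^{s}}
        \;=\; \prod_{p\ \text{prime}} \frac{1}{1 - p^{-s}},
        \qquad \operatorname{Re}(s) > 1

\text{Riemann Hypothesis:}\quad
\zeta(\rho) = 0 \ \text{ and } \ 0 < \operatorname{Re}(\rho) < 1
\;\Longrightarrow\; \operatorname{Re}(\rho) = \tfrac{1}{2}
```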

Sure, AI has helped. It’s sorted vast data sets, identified exoplanets in old NASA records, predicted protein structures with AlphaFold, and even discovered new antibiotics. But in each case, it’s capitalizing on existing frameworks or data sets, running deeper, faster analyses that we humans just don’t have the patience or time to do. The real epiphany moments, those “let’s rewrite the textbooks” leaps, have yet to materialize. If you’re like me, you’re wondering: “Wait, so we feed AI all human knowledge and it can’t spin up a brand-new concept? Why not?” AI researchers don’t really know.

I don't really have an answer to that. It seems certainly like memorization and facts and drawing connections is an area where the models are ahead. And I do think maybe you need those connections and you need a fairly high level of skill…and so I do think the models know a lot of things and they have a skill level that's not quite high enough to put them together.

- Dario Amodei, CEO of Anthropic, November 2023

Other researchers, like Eric Michaud, have suggested two possibilities:

Reason 1: There’s a Fundamental Limitation - Imagine an AI is like a library with millions of books (all the data it’s trained on). But even with all those books, the library’s "shelves" (the way it’s designed) might not let it organize or connect the information in a way that leads to new ideas. For example, the core architecture behind models like ChatGPT is called the transformer. Transformers are great at predicting the next word in a sentence or answering questions, but they might not be flexible enough to "think" like a human and spot new patterns or connections. If this is true, no amount of scaling (making the AI bigger or adding more data) will fix it; we’d need a totally new design or approach for the AI.

Reason 2: Scaling Might Work, But It Takes Time - Now imagine the AI is like a student cramming for a test. At first, the student memorizes facts (like dates or vocabulary) because that’s the easiest way to do well on the test. Later, as they get smarter or have more time, they start understanding bigger ideas and making connections between topics. Eric suggests that for AIs, learning simple stuff (like word patterns or basic facts) might be easier and more "rewarding" for the system than learning complex, creative thinking. The AI’s training process is designed to minimize "loss" (basically, how often it gets things wrong), and it might focus on learning lots of small, dumb things (like common phrases) instead of big, smart ideas (like solving a medical mystery). But as we scale up—make the AI bigger, give it more data, and use more powerful computers—it might eventually start learning those smarter, more creative skills. It’s just taking longer because there’s so much "easy" stuff to learn first.
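Here is a hypothetical toy model (not Eric’s actual model) that captures the intuition behind Reason 2. It assumes, purely for illustration, that the training data draws on many independent “skills” whose frequencies follow a power law (a few are common, most are rare), that every unlearned skill costs one unit of loss whenever it’s needed, and that the learner masters one skill per step, always picking the one that cuts loss the most. The names `skill_frequencies` and `learning_curve` are made up for this sketch.

```python
from __future__ import annotations

# Hypothetical toy model, NOT a real training run. Assumptions:
#   * the data draws on n independent "skills";
#   * skill k is needed with probability proportional to k ** -alpha
#     (a Zipf-like power law: a few common skills, a long tail of rare ones);
#   * each time an unlearned skill is needed, the model pays 1 unit of loss;
#   * the learner masters one skill per step, picking the biggest loss cut.


def skill_frequencies(n_skills: int, alpha: float = 1.5) -> list[float]:
    """Normalized power-law frequencies; skill 0 is the most common."""
    weights = [k ** -alpha for k in range(1, n_skills + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def learning_curve(freqs: list[float]) -> tuple[list[int], list[float]]:
    """Greedy learner. Mastering skill i removes freqs[i] of expected loss,
    so the greedy choice is always the most frequent unlearned skill."""
    order = sorted(range(len(freqs)), key=lambda i: freqs[i], reverse=True)
    loss = 1.0  # nothing learned yet: every needed skill is missing
    curve = [loss]
    for skill in order:
        loss -= freqs[skill]
        curve.append(loss)
    return order, curve


freqs = skill_frequencies(1000)
order, curve = learning_curve(freqs)
print(order[:5])                                 # [0, 1, 2, 3, 4]
print(f"loss after 10 skills: {curve[10]:.2f}")  # roughly 0.22
print(f"loss after 100 skills: {curve[100]:.2f}")
```

Under these assumptions, the first 1% of skills wipes out close to 80% of the loss, while each rare skill in the long tail shaves off only a sliver. If you read “frequent” as “easy,” that’s the shape Reason 2 describes: the rare, deep insights come last. The toy deliberately ignores how hard each skill is to learn, which is exactly the open question.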

But if Reason #2 is right, the problem is solvable. Doesn’t that mean we just need more data? And, like, $500 billion?

Possibly. But it might not be that simple. Let’s look to advice from one of the greatest thinkers of all time, Albert Einstein.

Creativity & Rule-Breaking: There’s a theory that big breakthroughs happen when you break the known rules. Einstein challenged Newtonian assumptions. The folks who discovered quantum mechanics basically said, “Classical logic, meet the door.” That rebellious spark—the willingness to discard the status quo—is something AI might not do on its own, especially because it’s built on patterns extracted from existing data. AI is usually trained to solve our problems in our way, constrained by the frameworks we give it. If the real solution lies outside our conceptual frameworks, we (and our AIs) might not see it. It’s like searching for your keys only under the streetlamp because that’s where the light is—meanwhile, the keys might be lost in a dark alley we’ve never walked down. When everything is about pattern recognition, stepping outside the pattern is ironically the biggest challenge.

Human-Level Insight: Real breakthroughs often fuse logic with leaps of intuition or weird hunches that defy straightforward analysis. We interpret ambiguous signals, we trust gut feelings, we get bored and daydream an outlandish idea. Can you program that? Maybe someday. Right now, it’s neither trivial nor guaranteed that AI can replicate that intangible spark.

Gaps in the Data: Some mysteries require brand-new experiments or phenomena we’ve never directly observed. AI can’t conjure data that doesn’t exist. Dark matter, for instance, might require capturing a specific particle in a lab or noticing a bizarre cosmic event we haven’t recorded yet. No matter how good you are at pattern recognition, you can’t find a pattern in nothing.

So Are We Losing Our Jobs to AGI in 2 Years?

I’ve come to terms with the fact that it’s okay to have conflicting feelings about AGI. Optimism, skepticism, curiosity, fear, hope: they all coexist in my head. We are in genuinely uncharted territory. If something drastically changes in two years (some emergent property of these giant models that we can’t predict) and we wake up to an AI that spontaneously solves P vs NP, well, great! I’ll be the first in line cheering. But we can’t count on that until it happens.

The biggest fear might be that once we do crack AGI, it could become unstoppable, rendering our contributions obsolete. Or maybe the biggest fear is that we’ll never actually reach the “holy grail” of an AI that truly understands, and we’ll stay stuck with super-smart pattern extractors: good enough to upend the job market, but not smart enough to solve the cosmic riddles.

My best guess is that future breakthroughs will come from a human–AI tag team. These big scientific mysteries show there’s a limit to what AI currently does best. The hardest, most fundamental leaps still require that unpredictable human touch. So maybe we haven’t worked ourselves out of a job entirely—yet. And if AGI does arrive tomorrow, maybe our true value as humans will persist for just a bit longer, because even advanced AI might need us to stage the creative leaps or question established logic. If we’re wise, we’ll collaborate. Human–AI synergy could be a potent formula for future breakthroughs, with AI doing the heavy lifting in data-crunching, and humans injecting the wacky, rebellious leaps of intuition (plus the nerve to break from tradition).

“Computers are incredibly fast, accurate, and stupid; humans are incredibly slow, inaccurate, and brilliant; together they are powerful beyond imagination.” - often attributed to Einstein (again!)

Either way, it’s quite a ride, and we can still add “productive value,” at least for a bit longer. After all, if the universe’s top secrets were purely a matter of brute force and bigger data sets, AI would’ve already dropped them on our doorstep.