Did Claude Cowork Break the Knowledge Worker Career Ladder?

What Claude Cowork Means for My Career (And My Kids')

I have three kids.

When I think about their futures, I do what every parent does: I project. I picture them landing that first job, building a career, getting that first promotion. The pride on their faces when they’re good at something valuable.

I’m a management consultant. I’ve spent my career in knowledge work—synthesizing research, building decks, distilling complexity into executive-ready insights. It’s the kind of work that’s been a golden ticket for decades: prestigious, well-compensated, the thing you tell your kids to aspire to.

This week, Anthropic launched Claude Cowork. It’s making me rethink my career.

And more importantly, I can’t stop thinking about my kids’.

What Just Happened

Earlier this week, Anthropic released Claude Cowork. It’s an AI agent that lives on your computer, accesses your files, and just... does things. Point it at receipt screenshots, ask for an expense report, and it’s done. Point it at scattered notes, ask for a polished report, and it’s done. No coding. Plain English.

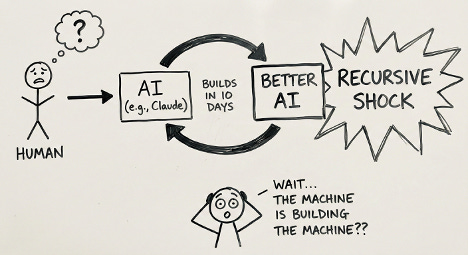

Here’s what’s especially wild: Anthropic built this in 10 days. Using their own AI. Which is mostly written by itself.

The recursive loop isn’t theoretical anymore. AI is building AI.

And the thing it’s building, their Cowork product? It does the work I’ve spent 15 years learning to do. If Cowork sees mass adoption, which I suspect it will, we will see that…

…My Career Ladder Will Break

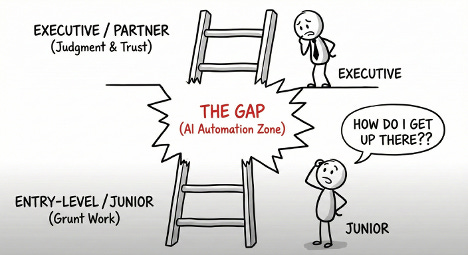

Here’s what the data shows, and I wish it weren’t this stark.

Gartner predicts that by 2026, 20% of companies will use AI to flatten their hierarchies, cutting over half their mid-tier roles. Revelio Labs already reports a 40% drop in middle-management job postings since 2022.

The metaphor everyone’s using is “the hourglass workforce.” Picture the old corporate pyramid: lots of juniors at the bottom, fewer managers in the middle, executives at the top. Now squeeze the middle until it nearly vanishes.

Heavy at junior levels (cheap, AI-fluent operators). Heavy at senior levels (judgment, relationships, accountability). Almost nothing in between.

The middle is where consulting analysts become senior associates. Where associates become engagement managers. Where you learn the craft. AI is eating that rung. And breaking the long-established career ladder.

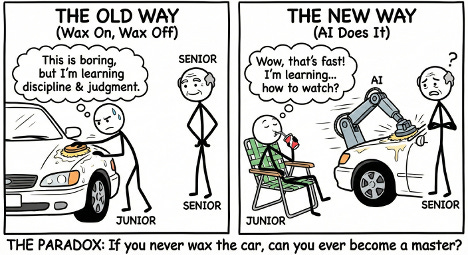

The Karate Kid Problem

I loved watching The Karate Kid. Daniel-san spends weeks waxing Mr. Miyagi’s car, painting his fence, sanding his floor. Boring, tedious work. But through those repetitive motions, he’s actually learning the fundamentals of karate without realizing it.

That’s how expertise used to work in consulting. You built 500 slides. You learned what makes a good slide. You sat in thousands of client meetings. You absorbed how to read a room. The grunt work was the training.

Now imagine AI does all the waxing. The junior sits in a lawn chair, sipping a drink, watching. Sure, the car gets waxed faster. But here’s the question that keeps me up at night: If you never wax the car, can you ever become a master?

What Still Gets Paid

So what survives? London Business School published a piece that nails it: “AI doesn’t do judgement.”

Judgment is what you exercise when facing situations without precedent—significant complexity, new variables, unusual trade-offs, insufficient data, convoluted qualitative factors, multidimensional risk, and the nuances of personality.

Translated into consulting terms: AI can build the slide. AI cannot know whether the slide should exist. AI can synthesize research. AI cannot sense that the CFO secretly hates the recommendation and you need to address her unstated concerns. AI can analyze the data. AI cannot navigate the boardroom politics that determine whether anything actually gets implemented.

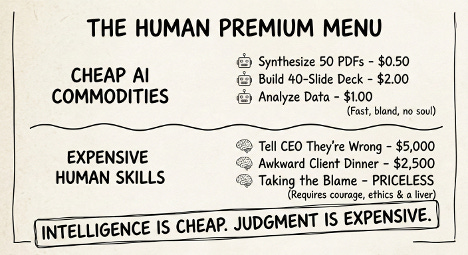

The shift is simple: Intelligence is now cheap. Judgment is still expensive. And if my kids want a career in that future, they’ll have to choose from the new human premium ‘menu’.

Careers will continue to exist that are built on:

Relationships. AI can’t have dinner with the client. Can’t be the person the CEO calls at midnight when the board is about to fire them. Trust is built human-to-human.

Judgment under ambiguity. When the data is incomplete, the situation is novel, and the stakes are high. AI trained on historical patterns struggles with genuine novelty.

Accountability. When something goes wrong, someone has to be responsible. AI can recommend. Humans bear consequences.

Taste. What should we build? For whom? Why this and not that? AI optimizes. Humans decide what’s worth optimizing for.

Physical presence in unpredictable environments. Hands-on work (plumbers, electricians, healthcare workers) remains largely insulated. The real world is messy in ways that break automation…for now.

What I’m Telling My Kids (Tentatively)

I used to think the advice was simple: get good grades, go to a good school, get a job, climb the ladder.

Now I’m less sure. The ladder itself is being disassembled.

The new advice might be something like: develop skills that require context AI can’t access, build relationships AI can’t have, cultivate judgment that only comes from navigating real-world ambiguity.

Or, frankly: learn to be a plumber. I say that half-jokingly, but the data supports it.